Defining technologies via nonlinear orthography

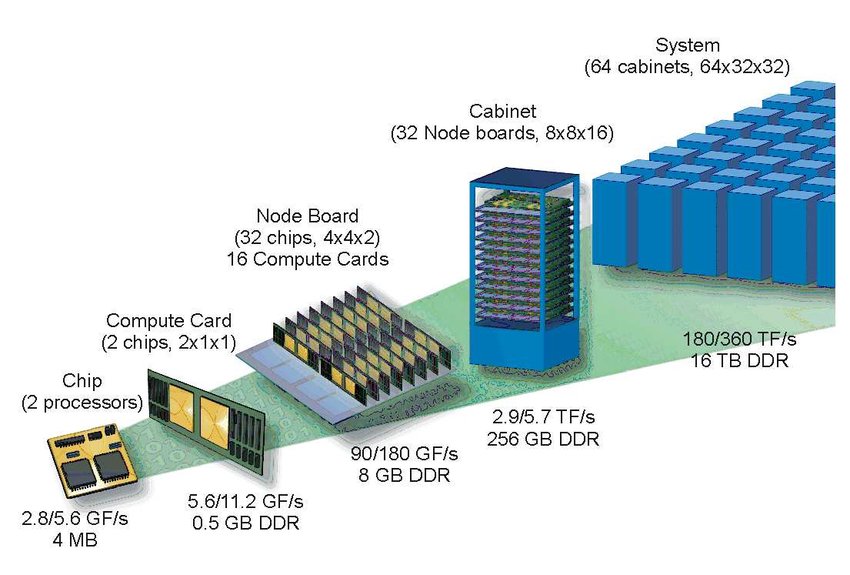

The image below shows the high-level, modular layout of a supercomputer architecture and illustrates the typical hierarchical process by which we currently engineer technologies. The system is created by a multi-level assembly process that proceeds from the left (chips, bottom level) to the final structure on the right (arrays of cabinets). Each level comprises a specific series of components (CPU, computer cards, boards, …) assembled from the previous levels. In this approach, implementing a single level requires all the inner levels to be correctly defined: building one layer (e.g., the computer cards) is impossible unless the components at the inner level (i.e., the processor chips) have been fully defined and characterized.

The level decomposition in the figure does not stop at the chips. Each processor is further decomposed into an inner hardware level composed of individual integrated transistors (see figure below), which are the basic building block of the entire system. Elements at higher levels (chips, cards, …) contain millions of identical copies of this design structure. The complete system works because these essential building elements (i.e., the transistors) are identical within some tolerance; if the copies differ beyond that tolerance, the system does not work.

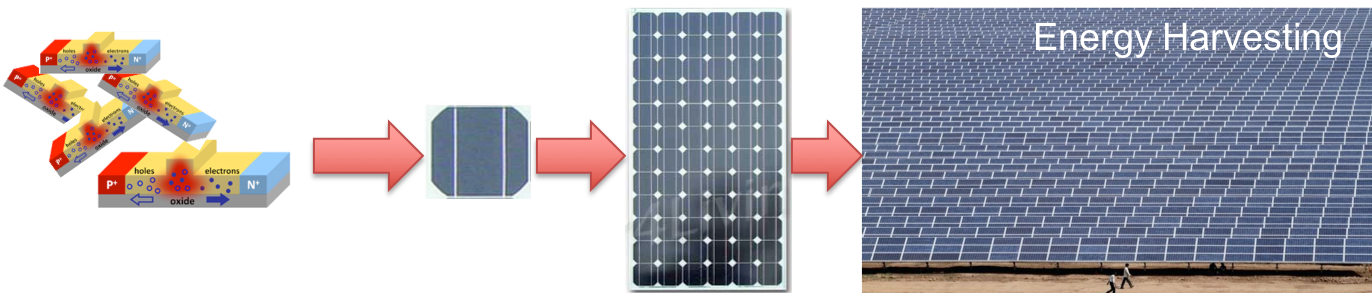

This hierarchical organization does not apply only to electronics, but is a general building approach adopted in any technological platform currently available, e.g., in energy harvesting:

We observe the same structure: a system built from identical basic building blocks, here PIN junctions, which convert light into electron-hole pairs that generate electric power. Identical PIN junctions are assembled at higher levels into solar panels and then replicated into a large-scale solar harvesting farm.

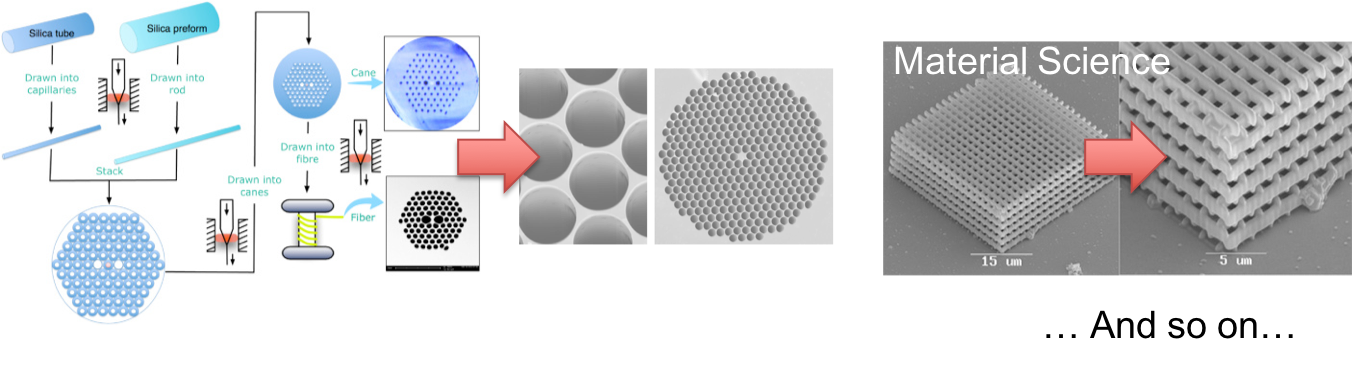

Another example from nanomaterials engineering:

We again observe the same periodic assembly of identical copies of basic building blocks, composed of different lattice structures of air holes realized on a semiconductor or oxide substrate. The final structures work if the periodic assembly of the identical units is accurate and break if the system lacks long-range order.

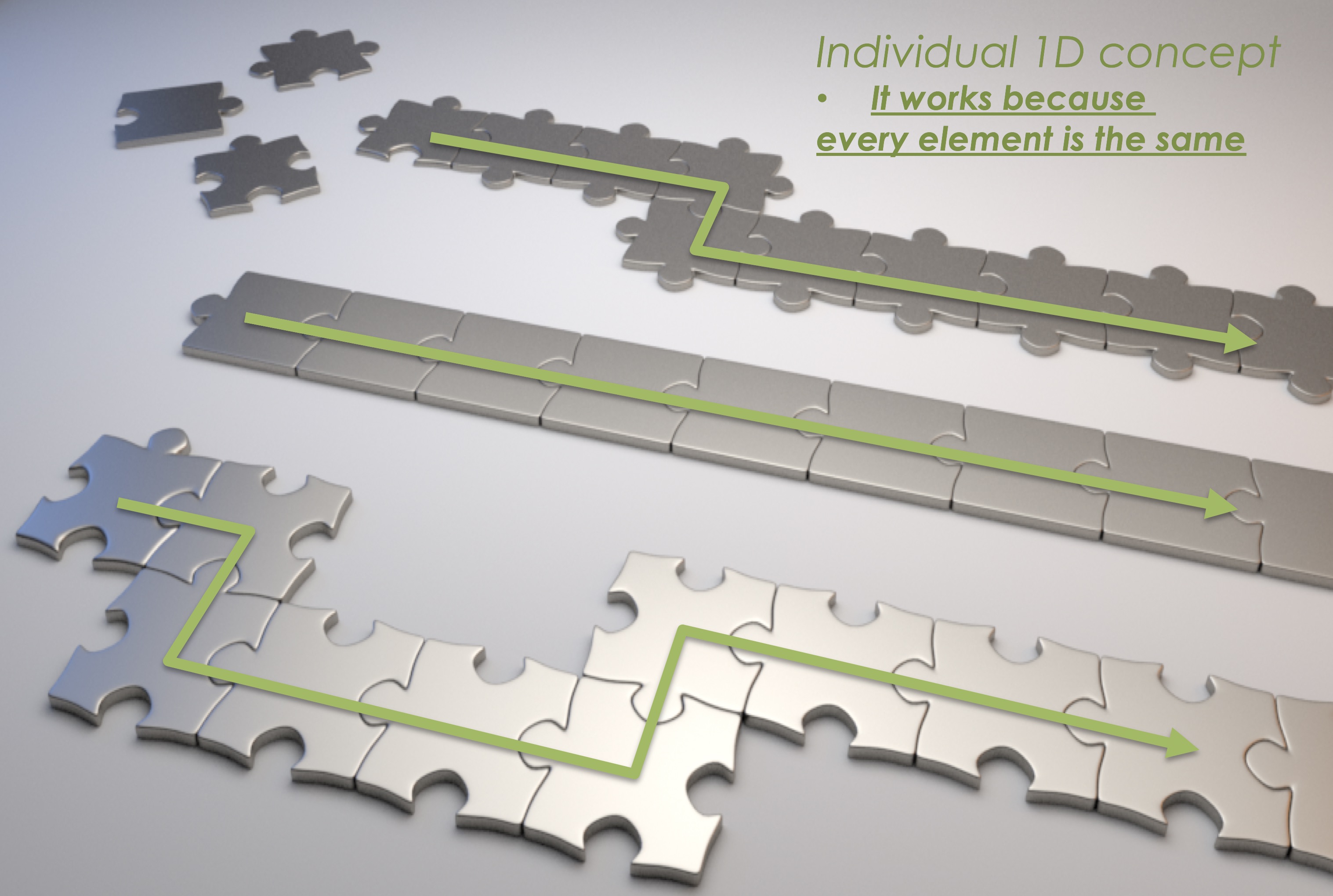

It is not difficult to continue with other examples and show that practically all technologies we produce today follow the same approach: the linear, hierarchical copying of individual building blocks, assembled into multi-level structures in which each level has specific rules and a well-defined set of tasks and behaviors. We can represent this process as a one-dimensional puzzle, which is assembled piece by piece (i.e., level by level) using an identical piece every time (i.e., the building block). We do not insert a piece unless all the previous pieces are in place:

This picture visually illustrates the many drawbacks of this linear approach:

- Fragility: the system breaks if any piece is missing or is not identical to the others

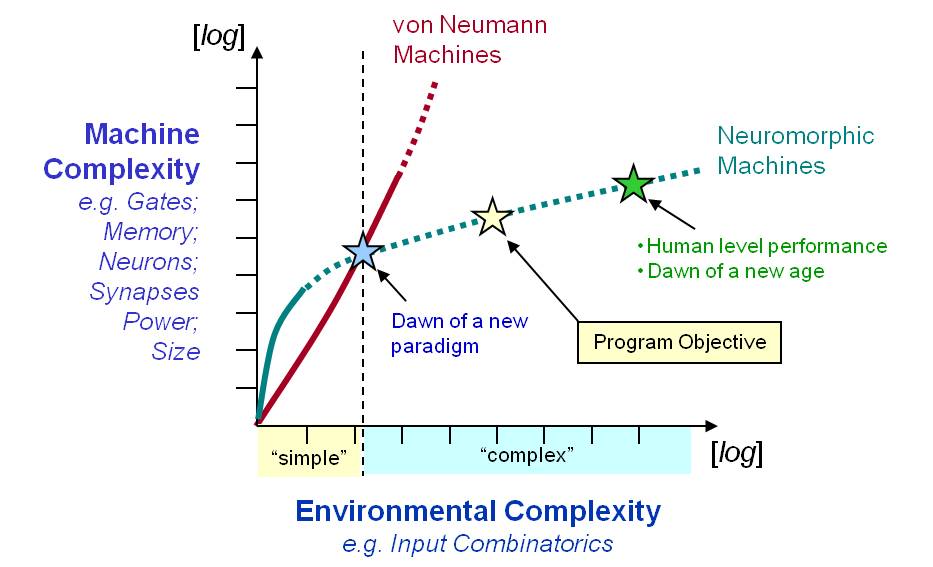

- Lack of efficiency and unsustainable development: the system output increases linearly with the number of pieces. This implies that any system feature (computational power, energy harvesting efficiency, power consumption, …) scales linearly with the system size, so that beyond a certain point the system can no longer sustain further improvement. The image below (DARPA Synapse project at http://www.artificialbrains.com/darpa-synapse-program) illustrates this issue in computer science: the projected energy demand of standard machines grows too steeply, and a sharp paradigm shift is required to sustain technological progress in this field.
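The two drawbacks listed above can be put into numbers with a toy model. The sketch below is only an illustration under assumptions that are ours, not the article's: every piece works independently with the same probability `p`, the chain works only if all pieces work, and each working piece contributes the same fixed output. The function names are hypothetical.

```python
def chain_works_probability(p: float, n: int) -> float:
    """Probability that a serial chain of n pieces works.

    Assumption (ours): each piece works independently with probability p,
    and the chain fails as soon as a single piece fails.
    """
    if not 0.0 <= p <= 1.0:
        raise ValueError("p must lie in [0, 1]")
    if n < 0:
        raise ValueError("n must be non-negative")
    return p ** n


def linear_output(per_piece_output: float, n: int) -> float:
    """Total output of n identical pieces when the output grows linearly."""
    return per_piece_output * n


if __name__ == "__main__":
    # Even very reliable pieces give a fragile chain once n is large,
    # while the output only grows in proportion to n.
    for n in (10, 1_000, 100_000):
        works = chain_works_probability(0.9999, n)
        output = linear_output(1.0, n)
        print(f"n={n:>7}  P(chain works)={works:.6f}  output={output:.0f}")
```

In this toy model the probability that the whole chain works decays geometrically with the number of pieces, while the output grows only in proportion to it, which is one way to read the fragility and scaling arguments made in the list.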

What is the origin of this engineering approach? An interesting perspective on this question comes from linguistics theories:

We have the same word for falling snow, snow on the ground, snow packed hard like ice, slushy snow, wind-driven flying snow—whatever the situation may be….To an Eskimo, this all-inclusive word would be almost unthinkable; he would say that falling snow, slushy snow, and so on, are sensuously and operationally different, different things to contend with; he uses different words for them and for other kinds of snow….We dissect nature along lines laid down by our native languages,…The grammar of each language is not merely a reproducing instrument for voicing ideas but rather is itself the shaper of ideas.

Benjamin L. Whorf, “Science and Linguistics”, MIT Technology Review 42, 229-231 (1940)

In the theory of “linguistic relativity” introduced by Whorf in the seminal paper cited above, the language we speak shapes our worldview and influences our thoughts and cognition. From infancy, the language we are taught influences how our neurons connect and determines how we perceive reality. In this view, different human populations developed different languages because of how they “dissect” and perceive Nature. Critics of this theory argue that it is the other way around: languages are the product of how we think and perceive the world through our senses. Both perspectives are relevant to our discussion: they point to language as the expression of our natural form of thinking.

Although the various cultures on the planet developed languages that sound and read differently, they all share a common feature: they are all based on linear orthography:

I am writing a sentence in the English language.

Regardless of the language, we write letter by letter (i.e., piece by piece, level by level). We assemble letters into hierarchical structures (words, sentences, paragraphs, …). We write sequentially: we do not place a letter or a word unless all the previous words are in place and carry the meaning we intend to convey. Our orthography precisely mirrors the way we build technologies. If the form of thinking we developed is linear, like our orthography, it is no surprise that this is how we naturally tend to express our minds, whether in language or in the realization of technological products.

Complex natural systems, however, show us that there are other intriguing paths to follow. These systems originate from millions of years of adaptations and leverage a much more evolved concept: “nonlinear orthography” linguistics.

“…Unlike all human written language, their writing is symbolic. It conveys meaning, it does not represent sound. Perhaps they view our form of writing as a wasted opportunity, passing up a second communications channel. We have our friends in Pakistan to thank for their study on how the heptapods write. Because unlike speech, a logogram is free of time. Like their ship or their bodies, their written language has no forward or backward direction. Linguists call this nonlinear orthography. Which raises the question: “Is this how they think?” Imagine you wanted to write a sentence using two hands, starting from either side. You’d have to know each word you wanted to use, as well as how much space they will occupy. A heptapod can write a complex sentence in two seconds effortlessly. It took us a month to make the simplest reply…”

Ian Donnelly (Arrival, 2016)

Complex systems use a “nonlinear orthographic” design platform that assembles all the system’s parts in parallel, with the overall functionality conceptually defined within a single design step. This analysis perhaps illustrates the main challenge in imitating these systems: it requires us to understand a form of orthography that is not innate to us and, as such, is conceptually difficult and “complex” to grasp.

A first step to overcome this problem is to observe that such difficulty, or complexity, is perhaps only a mental projection. To a complex system, a “nonlinear orthographic” design concept is probably the simplest, and therefore the most natural, approach to achieving the following properties:

- Robustness: even if a single element breaks, the system can re-route information through a structure connected in a multidimensional space. As such, it significantly reduces failures and maintains its functionality even under strong stress conditions.

- Scalability: the system does not use periodic copies of identical objects and, as such, can be manufactured on large scales easily.

- Efficiency: the system response originates from the parallel interaction of many elements and scales exponentially with the system size. This feature is a great advantage over linear systems, whose response scales only linearly and whose performance is therefore far inferior.

- Sustainability: exponential efficiency implies that a complex system tends to dissipate only a limited amount of resources, and as such, it possesses a high degree of sustainable development. Read more on these properties here.
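The robustness property in the list above (re-routing around a broken element) can be illustrated with a deliberately small toy comparison. This is a sketch under our own assumptions, not a description of how Primalight designs its systems: a one-dimensional chain stands in for the linear puzzle, a small two-dimensional lattice stands in for a structure connected in more than one dimension, and the helper names `line_graph`, `grid_graph`, and `is_reachable` are hypothetical.

```python
from collections import deque


def line_graph(n):
    """1D chain: node i is linked only to its neighbours i-1 and i+1."""
    return {i: {j for j in (i - 1, i + 1) if 0 <= j < n} for i in range(n)}


def grid_graph(rows, cols):
    """2D lattice: each node is linked to its up/down/left/right neighbours."""
    graph = {}
    for r in range(rows):
        for c in range(cols):
            neighbours = set()
            for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols:
                    neighbours.add((rr, cc))
            graph[(r, c)] = neighbours
    return graph


def is_reachable(graph, source, target, broken=frozenset()):
    """Breadth-first search from source to target that skips broken nodes."""
    if source in broken or target in broken:
        return False
    seen = {source}
    queue = deque([source])
    while queue:
        node = queue.popleft()
        if node == target:
            return True
        for nxt in graph[node]:
            if nxt not in seen and nxt not in broken:
                seen.add(nxt)
                queue.append(nxt)
    return False


if __name__ == "__main__":
    chain = line_graph(9)
    grid = grid_graph(3, 3)
    # Break the middle element of each nine-node structure.
    print(is_reachable(chain, 0, 8, broken={4}))                # False
    print(is_reachable(grid, (0, 0), (2, 2), broken={(1, 1)}))  # True
```

With the same number of nodes, removing the middle element disconnects the two ends of the chain, while the lattice still offers a path around the broken node. Real complex systems are of course far richer than a regular lattice; the example only shows why extra connectivity makes re-routing possible.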

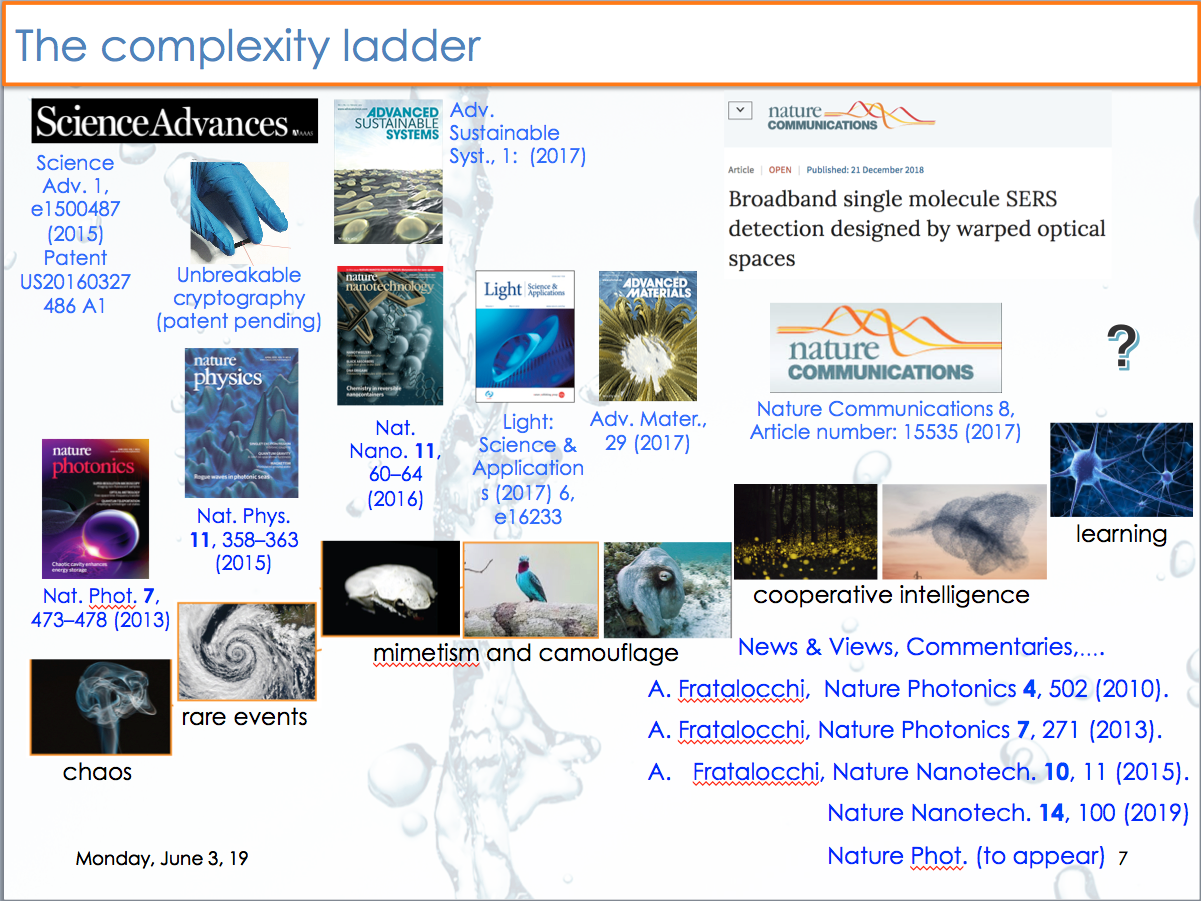

At Primalight, we proceed step by step up the complexity ladder: a ladder of different forms of complexity, ranging from the most studied to the most difficult to understand, along which we develop different technological products from energy devices to smart materials, bio-imaging, security, and beyond. This research is not biomimicry (we do not copy complex systems). It aims to understand the rules of complex systems in order to “write” technologies via nonlinear orthography, following an evolutionary-driven design paradigm to obtain high levels of efficiency, sustainability, and robustness in scalable platforms.